OK, so this is off topic, but it’s so important that I had to blog about it. Let me give you some background. Last week I was traveling with my family on a road trip to Canada. Usually I’m super paranoid and never connect to any open wireless network (I pay for and carry my own mi-fi device because of this). Since we were in Canada I didn’t want to get hit with a lot of charges, so I chanced it on a few networks. I made sure to connect to VPN as soon as I could, but there was still some time when I was not completely protected. At one point I thought that my gmail account had been hacked (further investigation proved this to be false, thankfully). So I connected to a known network, applied VPN, double checked that my IP was routed through VPN and started changing my critical passwords. One of those was for an account with BBVACompass bank. I initially set up this account a while back, and evidently I wasn’t as concerned with online security then as I am now.

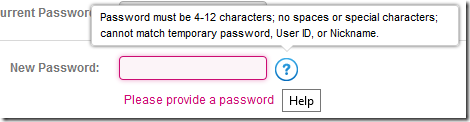

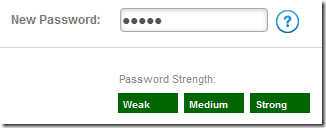

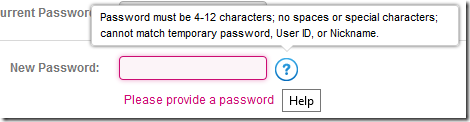

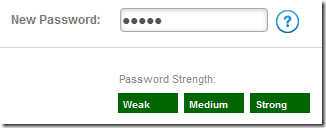

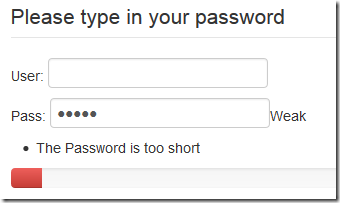

When I got to the password reset screen here is the tooltip indicating the password requirements.

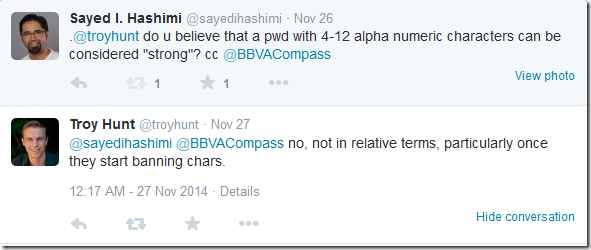

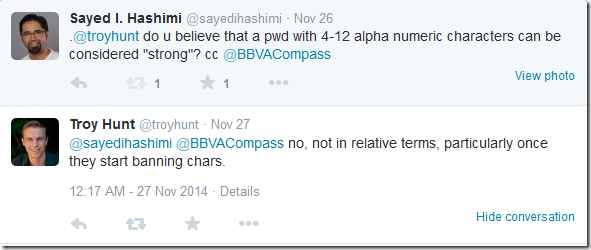

In developer terms that is 4-12 characters, alphanumeric only. You cannot use any special characters or spaces. I’m not a security expert so I reached out to @TroyHunt (founder of https://haveibeenpwned.com/ and recognized security expert) to see what his thoughts were on this. Here is his response.

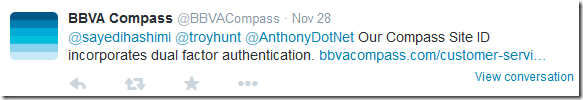

Later in the conversation BBVACompass chimed in stating

So I looked at the link http://www.bbvacompass.com/customer-service/online-banking/siteid.jsp to see if there was some other way to authenticate which was more secure. From what I understood from that link they have a service called “Site ID” which consists of the following.

- You enter three security questions/answers

- When logging in on a new machine you are prompted for the security questions/answers and asked whether the machine is “trusted”

- When logging in using a trusted machine the password is never submitted over the wire

The linked page gave no indication that this was “dual factor authentication”, as the @BBVACompass twitter account tried to pass off on me. I let them know that this is not two/dual factor auth. Even with “Site ID”, if I log in on a compromised machine, all security questions/answers and the password can be stolen, and an attacker can then log in without me ever being notified. That defeats the purpose of two factor auth. With two factor auth, if I sign into google on a compromised machine, an attacker gets my password, but to sign in later they would also need access to my phone’s text messages. That is true two factor auth, not security questions.

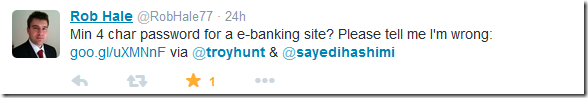

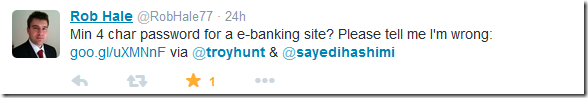

Another security expert @RobHale77 also chimed in later with the comments below.

What’s a strong password?

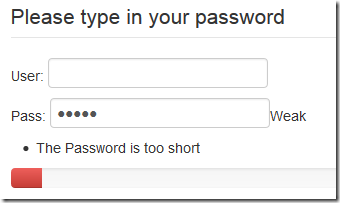

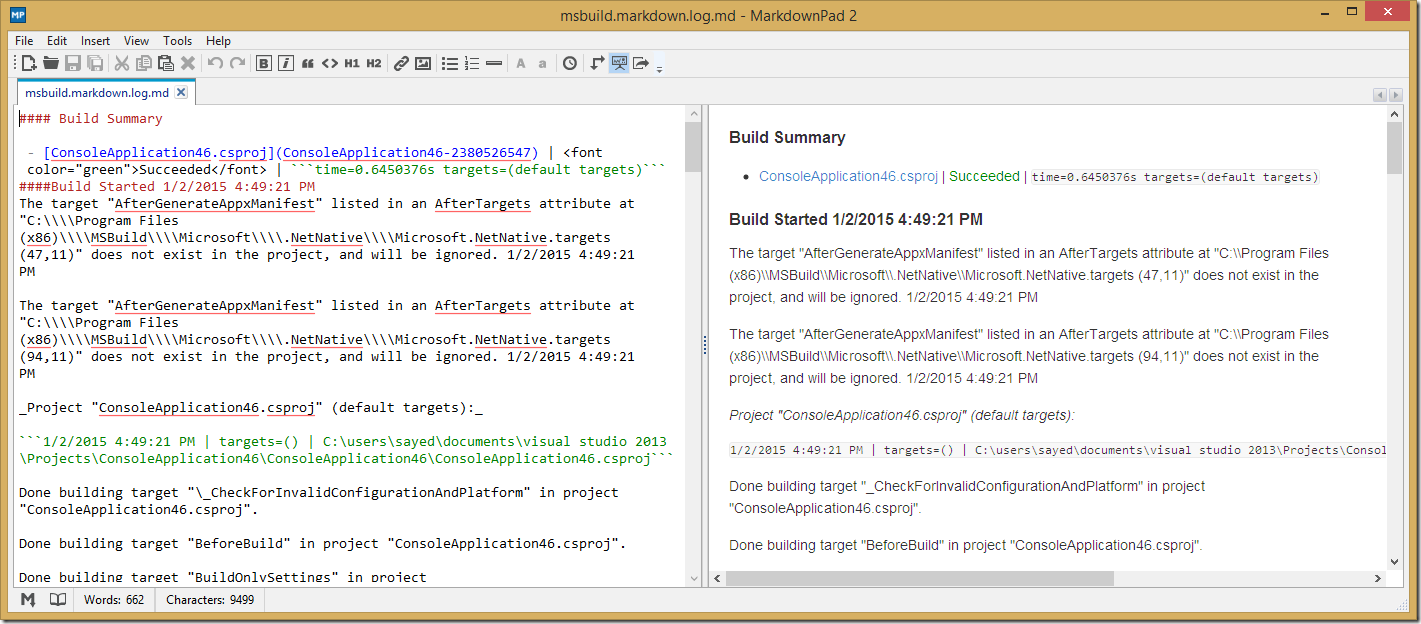

I decided to do a bit more investigation into how BBVACompass represents password strength on their change password page. I guessed that the password strength field was being populated with JavaScript, so I opened the site in my browser and used the in-browser dev tools to look at the code. Here is the getPasswordStrength function; comments were added by me.

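    function getPasswordStrength(H){
        // ... (excerpt: some lines, including the one that sets D, are not shown)
        // G: number of digits in the password
        var F=H.replace(/[0-9]/g,"");
        var G=(H.length-F.length);
        // C: number of non-word characters (anything other than letters, digits, "_")
        var A=H.replace(/\W/g,"");
        var C=(H.length-A.length);
        // I: number of upper case letters
        var B=H.replace(/[A-Z]/g,"");
        var I=(H.length-B.length);
        // E: weighted sum of the counts above
        var E=((D*10)-20)+(G*10)+(C*15)+(I*10);
        // ... (rest of the function not shown)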
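The excerpt uses the same trick three times: strip one class of characters and compare lengths to count how many there were. Here is a more readable sketch of just that counting step. The names `countMatches` and `characterCounts` are mine, not from the bank's code, and I have left out the final score because the excerpt does not show where `D` comes from.

```javascript
// Hypothetical helper names; only the regexes come from the excerpt.

// Counts characters matching a global regex by removing them
// and measuring how much shorter the string became.
function countMatches(password, pattern) {
  return password.length - password.replace(pattern, "").length;
}

function characterCounts(password) {
  return {
    digits: countMatches(password, /[0-9]/g),  // G in the excerpt, weight 10
    nonWord: countMatches(password, /\W/g),    // C in the excerpt, weight 15
    upper: countMatches(password, /[A-Z]/g),   // I in the excerpt, weight 10
  };
}

console.log(characterCounts("Hi123")); // { digits: 3, nonWord: 0, upper: 1 }
```

Two things stand out. Lower case letters are not counted anywhere in the visible lines, and since the site only accepts alphanumeric passwords, the non-word count is always zero for any password you are allowed to pick.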

The result is then converted to weak/medium/strong by the following JS function; once again, comments were added by me.

    $.fn.passwordStrength = function( options ){
        return this.each(function(){
            var that = this;that.opts = {};
            that.opts = $.extend({}, $.fn.passwordStrength.defaults, options);
            // ...
            that.div = $(that.opts.targetDiv);
            that.defaultClass = that.div.attr('class');
            // ...
            // width of one strength band, e.g. 100 / 3 for three classes
            that.percents = (that.opts.classes.length) ? 100 / that.opts.classes.length : 100;
            // ...
            if( typeof el == "undefined" )
            // ...
            var s = getPasswordStrength (this.value);
            var p = this.percents;
            // index of the band the score falls into
            var t = Math.floor( s / p );
            // ...
            t = this.opts.classes.length - 1;
            // ...
            .addClass( this.defaultClass )
            .addClass( this.opts.classes[ t ] );
            // ...
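As far as I can tell from the excerpt, the plugin splits the 0-100 range into equal bands, one per CSS class, and uses the score to pick a band. Here is a standalone sketch of just that mapping. The function name, the three class names, and the clamping of out-of-range scores are my assumptions; the excerpt only shows the `percents` calculation, the `Math.floor( s / p )` step, and an assignment of `classes.length - 1`.

```javascript
// Sketch of the score-to-label mapping. strengthClass and the default
// class names are hypothetical, not taken from the bank's code.
function strengthClass(score, classes = ["weak", "medium", "strong"]) {
  // Width of each band, mirroring the excerpt's `percents` calculation.
  const bandWidth = classes.length ? 100 / classes.length : 100;
  let index = Math.floor(score / bandWidth);
  // Assumed clamping so that out-of-range scores still map to a label.
  if (index < 0) index = 0;
  if (index > classes.length - 1) index = classes.length - 1;
  return classes[index];
}

console.log(strengthClass(20));  // "weak"
console.log(strengthClass(50));  // "medium"
console.log(strengthClass(70));  // "strong"
console.log(strengthClass(150)); // "strong" (clamped)
```

With three bands, any score of about 67 or more already lands in the top band, so it does not take much to be labeled strong.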

Since the code is minified, it is somewhat difficult to follow. What I found was that a strong password consisted of the following.

- 3 numbers

- 1 upper case letter

- 1 lower case letter

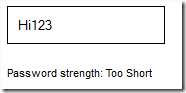

So I decided to try “Hi123” to see if I was right. Sure enough BBVACompass told me that the selected password Hi123 is a Strong password!

This is beyond insane. It contains a word and a sequence of 3 numbers (likely the most common sequence at that as well). BBVACompass, this is misleading at best. This is nowhere near strong, you are lying to your customers about the security of their passwords. Here are some passwords and how BBVACompass represents their strength. If you have an account you can verify this by going to the change password screen under Online Banking Profile.


weak

- aaaa

- bbbb

- swrxwuppzx

- hlzzeseiyg

medium

- 1234

- a123

- HLZzESeiYG

- sWrXwUppZX

strong

- Hi123

- 123Ab

- 123Food

- 111Hi

- 111Aa

Why on earth is “hlzzeseiyg” weak while “111Aa” is strong?! Clearly this is poorly implemented and misleading. Fix it now.

What I would like to see as a consumer

My top recommendation for BBVACompass is to get a security expert/team involved to redo your online security, but if you cannot afford that then follow what’s below.

I’m not a security expert but here is what I recommended to BBVACompass as a consumer.

- Support for very strong passwords: at least 20 characters long, with special characters allowed

- Support for true two factor auth like password/text or password/call

- (stretch goal) Support to view an audit log of devices that have recently accessed my account

I am getting all of the above features from google currently.
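To put the first recommendation in numbers, here is a rough sketch comparing the current rule with what I am asking for. The regex is my reading of the tooltip (4-12 letters and digits), and the function names are made up for illustration. The bit counts are upper bounds that assume every character is picked uniformly at random, which real passwords rarely are.

```javascript
// Hypothetical helper names; none of this comes from the bank's site.

// The current rule as I read the tooltip: 4-12 characters, letters and digits only.
const CURRENT_RULE = /^[A-Za-z0-9]{4,12}$/;

function allowedByCurrentRule(password) {
  return CURRENT_RULE.test(password);
}

// Upper bound on strength in bits, assuming each character is picked
// uniformly at random from an alphabet of the given size.
function maxEntropyBits(alphabetSize, length) {
  return length * Math.log2(alphabetSize);
}

console.log(allowedByCurrentRule("Hi123"));     // true
console.log(allowedByCurrentRule("p@ss word")); // false: "@" and the space are rejected
console.log(maxEntropyBits(62, 12)); // roughly 71 bits: the best case under the current rule
console.log(maxEntropyBits(95, 20)); // roughly 131 bits: 20 printable ASCII characters
```

Even a perfectly random 12 character alphanumeric password tops out far below what a 20 character password with special characters can reach.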

Be more transparent about weak passwords

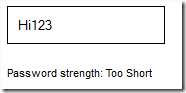

Now that I’ve seen the guts of their getPasswordStrength function I’d like to see BBVACompass implement a better function for reporting password strength. One that takes into account dictionary words, and common patterns. As stated I’m not a security expert but after a quick search I found http://www.sitepoint.com/5-bootstrap-password-strength-metercomplexity-demos/ which includes a pointer to live demo jquery.pwstrength.bootstrap (http://jsfiddle.net/jquery4u/mmXV5/) and StrongPass.js (http://jsfiddle.net/dimitar/n8Dza/). Below are the results for the same “Hi123” password from both.

As you can see if BBVACompass had used readily available Open Source tools to verify password strength we wouldn’t be having this conversation. Both reported the password as being unacceptable.
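To give a feel for what taking dictionary words and common patterns into account might mean, here is a tiny sketch. It is not how either of those libraries works internally. The word list, the sequence list, the 12 character minimum, and the function name are placeholders I made up, and a real checker would use far larger lists.

```javascript
// Hypothetical, deliberately tiny lists; a real checker needs much larger ones.
const COMMON_WORDS = ["password", "hi", "food", "bank", "compass"];
const COMMON_SEQUENCES = ["123", "111", "abc", "qwerty"];
const MIN_LENGTH = 12; // arbitrary threshold chosen for this sketch

// Returns a list of human-readable problems; an empty list means none were found.
function findWeaknesses(password) {
  const lower = password.toLowerCase();
  const problems = [];
  if (password.length < MIN_LENGTH) {
    problems.push(`shorter than ${MIN_LENGTH} characters`);
  }
  for (const word of COMMON_WORDS) {
    if (lower.includes(word)) problems.push(`contains the common word "${word}"`);
  }
  for (const seq of COMMON_SEQUENCES) {
    if (lower.includes(seq)) problems.push(`contains the common sequence "${seq}"`);
  }
  // Same character three or more times in a row, e.g. "111" or "aaa".
  if (/(.)\1\1/.test(password)) {
    problems.push("repeats the same character three or more times in a row");
  }
  return problems;
}

console.log(findWeaknesses("Hi123"));      // three problems: length, "hi", "123"
console.log(findWeaknesses("hlzzeseiyg")); // one problem: length
```

Even something this crude flags “Hi123” for three separate reasons, while the random looking “hlzzeseiyg” is only flagged for being short.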

Accountability

As consumers we must hold our online service providers (especially banks) accountable for online security. For the tech-savvy bunch, it’s your responsibility to educate your non-tech friends/family about online security and strong passwords.

As a bank, BBVACompass needs to hold its development team accountable for providing customers with secure access to their accounts online, as well as honest indications of password strength. You’re being dishonest, which means I cannot trust you.

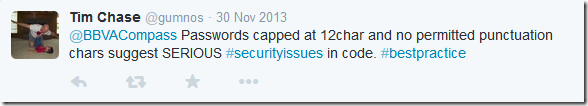

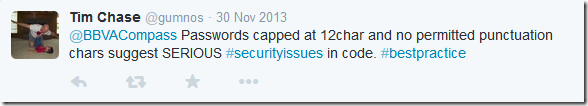

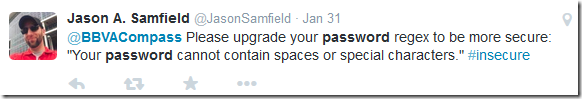

Previous pleas ignored by BBVACompass

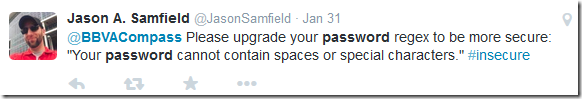

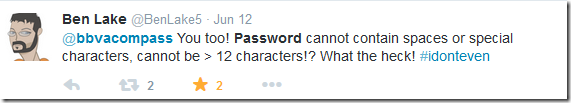

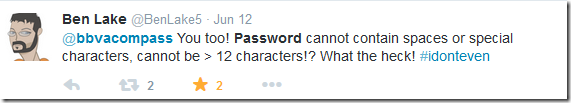

I did a search on twitter for “@BBVACompass password” and discovered that this has been brought up multiple times by customers. The earliest one I found is from November 2013! Tweets below.

https://twitter.com/gumnos/status/406880230670749696


https://twitter.com/JasonSamfield/status/429448195119136768

https://twitter.com/BenLake5/status/477262736208822273


BBVACompass, your customers have spoken and we are demanding better online security. Now is the time to act. I’ve already closed my account and I’ll be advising all friends/family with a BBVACompass account to do the same. With the recent security breaches at Sony/Target/etc., you need to start taking online security more seriously. This blog post and the twitter comments may lead to a few accounts closing, but if your customers experience widespread hacking the damage will be much more severe. Fix this before it is too late. This should be your top development priority IMO.

My Promise to BBVACompass

BBVACompass, if you support passwords >= 20 characters with special characters within 90 days, I will re-open my account, with the same funds I had when I closed it, the next time I’m in Florida.

Note: please post comments at http://www.reddit.com/r/technology/comments/2o4uat/i_closed_my_bbvacompass_account_because_they/.

Sayed Ibrahim Hashimi @SayedIHashimi
